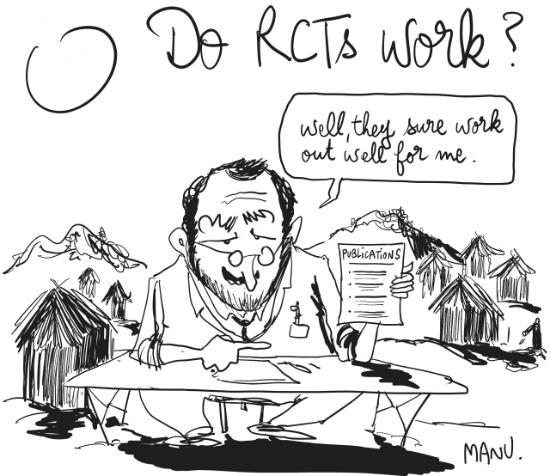

Blogs review: The popularity of Randomized Control Trials

What’s at stake: The popularity of Randomized Control Trials (RCTs) in academia has led to an impressive increase in the amounts governments and inter

The coming of age of RCTs

Joshua Angrist and Jörn-Steffen Pischke write that empirical microeconomics has experienced a credibility revolution, with a consequent increase in policy relevance and scientific impact. The primary engine driving this improvement has been a focus on the quality of empirical research designs. The advantages of a good research design are perhaps most easily apparent in research using random assignment.

The Economist writes that RCTs are like drug trials for economics. If you want to find out whether, say, using identity cards would improve the delivery of subsidized rice to the poor and reduce theft, you take a collection of comparable villages or households, randomly assign ID cards to some and not to others, and then wait to see what happens. Anders Olofsgård writes that the recent focus on impact evaluation within development economics has led to increased pressure on aid agencies to provide evidence from RCTs. Free exchange reports that the World Bank runs RCTs, as do regional bodies like the Inter-American Development Bank. Even governments deploy them.
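
As a minimal sketch of the design The Economist describes, assuming entirely hypothetical data: 2,000 villages, each with a made-up baseline share of subsidized rice lost to theft, and an assumed true effect of ID cards of a 0.10 reduction in that share. The helpers `randomly_assign` and `difference_in_means` are illustrative names, not taken from any library or study. Because assignment is random, treated and control villages are comparable on average, so the simple difference in mean outcomes recovers the average effect up to sampling noise.

```python
import random
from statistics import mean

def randomly_assign(units, seed=0):
    """Shuffle the units and split them into a treated half and a control half."""
    rng = random.Random(seed)
    shuffled = list(units)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return set(shuffled[:half]), set(shuffled[half:])

def difference_in_means(outcomes, treated, control):
    """Estimate the average effect as mean outcome (treated) minus mean outcome (control)."""
    return mean(outcomes[u] for u in treated) - mean(outcomes[u] for u in control)

# Hypothetical data: 2,000 villages, each with a baseline share of rice lost to theft.
rng = random.Random(42)
villages = range(2000)
baseline_theft = {v: rng.uniform(0.2, 0.6) for v in villages}

# Assumed true effect, made up for illustration: ID cards cut the theft share by 0.10.
TRUE_EFFECT = -0.10

treated, control = randomly_assign(villages, seed=1)
observed = {v: baseline_theft[v] + (TRUE_EFFECT if v in treated else 0.0) for v in villages}

estimate = difference_in_means(observed, treated, control)
print(f"estimated effect: {estimate:.3f}")  # should land near TRUE_EFFECT
```

Nothing about the villages is used to decide who gets a card, which is exactly what rules out the selection problems discussed in the next section.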

Good estimates from the wrong place versus bad estimates from the right place

Joshua Angrist and Jörn-Steffen Pischke write that the rise of the experimentalist paradigm has provoked a reaction centered on the question of external validity: the concern that evidence from a given experimental or quasi-experimental research design has little predictive value beyond the context of the original experiment. The criticism here, made by a number of authors including Heckman (1997), Rosenzweig and Wolpin (2000), Heckman and Urzua (2009), and Deaton (2009), is that in the quest for internal validity, design-based studies have become narrow or idiosyncratic.

Lant Pritchett and Justin Sandefur write that external validity is such a big issue that OLS estimates from the right context are, at present, a better guide to policy than experimental estimates from a different context. Berk Özler explains that the variation across contexts to which Pritchett and Sandefur refer is, more or less, variation in well-identified effect sizes (ES) between contexts, while the variation within a context is the difference between a poorly identified estimate and the well-identified one in that same context. In other words, if you ran an OLS regression of student performance on vouchers using the baseline data from your voucher experiment and compared that biased ES with the experimental one, the gap would be your within variation. If you compared the experimental ES from Colombia with one from Tanzania, that would be your across variation.
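
To make Özler's distinction concrete, here is a toy simulation under made-up assumptions. In each of two hypothetical contexts, labelled "A" and "B" (standing in for places like Colombia and Tanzania), an unobserved trait such as parental motivation raises test scores and, absent randomization, also makes families more likely to obtain a voucher. The OLS slope on self-selected voucher receipt is then biased, while the slope on randomly assigned vouchers is not. The gap between the two within one context is the "within" variation; the gap between the two contexts' experimental estimates is the "across" variation. All parameter values and function names are illustrative, not estimates from any study.

```python
import random

def ols_slope(x, y):
    """Slope from an OLS regression of y on x with an intercept: cov(x, y) / var(x)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    var = sum((xi - mx) ** 2 for xi in x)
    return cov / var

def simulate_context(true_effect, n=20000, seed=0):
    """Return (naive OLS estimate, experimental estimate) for one hypothetical context."""
    rng = random.Random(seed)
    motivation = [rng.gauss(0, 1) for _ in range(n)]
    noise = [rng.gauss(0, 1) for _ in range(n)]

    # Observational world: more motivated families are more likely to get a voucher.
    selected = [1 if m + rng.gauss(0, 1) > 0 else 0 for m in motivation]
    # Experimental world: vouchers assigned by coin flip, independent of motivation.
    randomized = [1 if rng.random() < 0.5 else 0 for _ in range(n)]

    def scores(voucher):
        # Test score = voucher effect + motivation effect (assumed 2.0) + noise.
        return [true_effect * v + 2.0 * m + e for v, m, e in zip(voucher, motivation, noise)]

    naive = ols_slope(selected, scores(selected))
    experimental = ols_slope(randomized, scores(randomized))
    return naive, experimental

naive_a, exp_a = simulate_context(true_effect=0.5, seed=1)  # hypothetical context "A"
naive_b, exp_b = simulate_context(true_effect=0.1, seed=2)  # hypothetical context "B"

within_variation_a = naive_a - exp_a  # bias of OLS relative to the experiment, same context
across_variation = exp_a - exp_b      # difference between well-identified effects
print(f"within (A): {within_variation_a:.2f}, across (A vs B): {across_variation:.2f}")
```

With these invented parameters the within gap is large and the across gap is small, which is the pattern that would favour experimental evidence from elsewhere; Pritchett and Sandefur's argument is that, in real data, the ranking can run the other way.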

Abhijit V. Banerjee and Esther Duflo write that the trade-off between internal and external validity is also present in observational studies. Joshua Angrist and Jörn-Steffen Pischke write that empirical evidence on any given causal effect is always local, derived from a particular time, place, and research design.

The role of interactions among components

Martin Ravallion writes that assessing a set of development policies and projects by evaluating its components one-by-one and adding up the results requires some assumptions that are pretty hard to accept. For one thing, it assumes that there are negligible interaction effects amongst the components. For another thing, it assumes that we can either evaluate everything in the portfolio or we can draw a representative sample, such that there is no selection bias in which components we choose to assess.
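
The first of Ravallion's assumptions can be illustrated with a small worked example. The sketch below assumes a hypothetical 2x2 design in which each of two components is either on or off, with invented mean outcomes (say, school enrolment in percent) for each cell. Adding up the two one-by-one effects gives 15 points, while delivering both components together gives 25; the 10-point gap is the interaction effect that the add-up approach assumes to be negligible. The function name and numbers are illustrative only, and the second assumption (no selection bias in which components get evaluated) is not captured here.

```python
def component_effects(y00, y10, y01, y11):
    """Given mean outcomes for a 2x2 design (component 1 off/on, component 2 off/on),
    return (effect of 1 alone, effect of 2 alone, joint effect, interaction)."""
    effect_1 = y10 - y00
    effect_2 = y01 - y00
    joint = y11 - y00
    interaction = joint - (effect_1 + effect_2)  # equals y11 - y10 - y01 + y00
    return effect_1, effect_2, joint, interaction

# Hypothetical mean enrolment rates (%): neither component, only a new school,
# only a cash transfer, both. All numbers are made up for illustration.
e1, e2, joint, inter = component_effects(y00=60.0, y10=70.0, y01=65.0, y11=85.0)
print(e1 + e2, joint, inter)  # 15.0 25.0 10.0
```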

An article in the Bulletin of the World Health Organization explains that the challenges in global health lie not in identifying efficacious interventions but in scaling them up effectively. This requires a nuanced understanding of how implementation varies across contexts. Context can have a greater influence on the uptake of an intervention than any pre-specified implementation strategy. Ann Oakley et al. write that most RCTs focus on outcomes, not on the processes involved in implementing an intervention, and argue that including a process evaluation would improve the science of many RCTs. Because of their multifaceted nature and dependence on social context, complex interventions pose methodological challenges and require adaptations to the standard design of such trials.

Have RCTs diverted an important amount of resources?

Martin Ravallion writes that our evaluative efforts are progressively switching toward things for which certain methods are feasible, whether or not those things are important sources of the knowledge gaps relevant to assessing development impact at the portfolio level. There is a positive “output effect” of the current enthusiasm for impact evaluation, but there is also likely to be a negative “substitution effect.” As Jishnu Das, Shantayanan Devarajan and Jeffrey Hammer have pointed out, it is not clear that RCTs are well suited to programs involving the types of commodities for which market failures are a concern, such as those with large spillover effects in production or consumption.
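
Why spillovers trouble a simple treated-versus-control comparison can be sketched with a deliberately crude, hypothetical model (an assumption for illustration, not the model Das, Devarajan and Hammer use): each treated unit gains a direct benefit, and a neighbouring control unit picks up part of that benefit. The comparison then understates the direct effect and misses the benefit that leaks to non-participants. The function name and numbers are invented.

```python
def rct_with_spillover(direct_effect, spillover, baseline=10.0):
    """Return (treated-minus-control estimate, total benefit per treated unit)
    in a hypothetical trial where control units receive a spillover benefit."""
    treated_mean = baseline + direct_effect
    control_mean = baseline + spillover  # the control group is contaminated
    rct_estimate = treated_mean - control_mean
    # Total benefit counts both the treated unit's gain and its neighbour's gain.
    total_benefit = direct_effect + spillover
    return rct_estimate, total_benefit

est, total = rct_with_spillover(direct_effect=1.0, spillover=0.4)
print(round(est, 2), round(total, 2))  # 0.6 1.4
```

Under these made-up numbers the trial would report 0.6, although each treated unit generates 1.4 in total benefit once the neighbour's gain is counted.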

Joshua Angrist and Jörn-Steffen Pischke write that a claim related to the external validity critique is that the experimentalist paradigm leads researchers to look for good experiments, regardless of whether the questions they address are important. Given that the recent economic crisis has spawned intriguing design-based studies of crisis macroeconomics (see, for example, Gabriel Chodorow-Reich’s 2013 job market paper), this claim seems less relevant today than when Noam Scheiber complained about an evolution epitomized by the success of Freakonomics.